Put away your Machine Learning hammer, criminality is not a nail

Nov 29, 2016 · 4 minute read

(This piece was originally posted on Medium)

Earlier this month, researchers claimed to have found evidence that criminality can be predicted from facial features. In “Automated Inference on Criminality using Face Images,” Xiaolin Wu and Xi Zhang describe how they trained classifiers using various machine learning techniques that were able to distinguish photos of criminals from photos of non-criminals with a high level of accuracy.

How you read this result depends on the assumptions you bring to it and on the question you want answered. The authors simply assume there’s no bias in the criminal justice system, and thus that the criminals they have photos of are a representative sample of the criminals in the wider population (including those who have never been caught or convicted for their crimes). The question they’re interested in is whether there’s a correlation between facial features and criminality. And given their assumption, they take their result as evidence that there is such a correlation.

But suppose instead you start from the assumption that there isn’t any relationship between facial features and criminality, and the question you’re interested in is whether there’s bias in the criminal justice system. Then you’ll take Wu and Zhang’s result as evidence that there is such bias: the criminal justice system is biased against people with certain facial features, which would explain the difference between photos of convicted criminals and photos of people from the general population.

The authors obviously never thought of this possibility. They write:

> Unlike a human examiner/judge, a computer vision algorithm or classifier has absolutely no subjective baggages, having no emotions, no biases whatsoever due to past experience, race, religion, political doctrine, gender, age, etc., no mental fatigue, no preconditioning of a bad sleep or meal. The automated inference on criminality eliminates the variable of meta-accuracy (the competence of the human judge/examiner) all together.

So humans are prone to bias, but this machine learning system isn’t? Despite the fact that the creation of the data set on which the system was trained had (biased) humans involved every step of the way, from the arrest to the conviction of each individual in it? The fact that the researchers didn’t notice this gaping hole in their logic is disconcerting to say the least. Even worse is that they seem to suggest that we should deploy a system like this in the real world. To do what exactly, the authors don’t say, but it probably wouldn’t be about targeting the right advertising at today’s discerning criminals. In the case of advertising, a false positive — an innocent person being identified as a criminal — wouldn’t have serious consequences. In more likely scenarios in which the system might be deployed, however, a false positive could have much worse results, e.g., unwarranted scrutiny of people who have done nothing wrong, or even worse, arrests of innocent people.
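To make the worry concrete, here is a minimal back-of-the-envelope sketch. Every number in it is made up for illustration and none comes from Wu and Zhang's paper: a hypothetical population in which some facial feature has no relationship at all to offending, but in which offenders with that feature are three times as likely to end up convicted. The names (`OFFENDING_RATE`, `CONVICTION_RATE`, and so on) are my own.

```python
from fractions import Fraction

# All values below are hypothetical, chosen only to illustrate the argument.
POPULATION = 100_000
SHARE_WITH_FEATURE = Fraction(1, 2)   # half the population has the facial feature
OFFENDING_RATE = Fraction(1, 20)      # identical true offending rate in both groups
# Assumed bias: P(convicted | offender), keyed by whether the person has the feature.
CONVICTION_RATE = {True: Fraction(3, 5), False: Fraction(1, 5)}


def expected_counts(has_feature):
    """Expected (people, offenders, convicted) for one group."""
    share = SHARE_WITH_FEATURE if has_feature else 1 - SHARE_WITH_FEATURE
    people = POPULATION * share
    offenders = people * OFFENDING_RATE
    convicted = offenders * CONVICTION_RATE[has_feature]
    return people, offenders, convicted


def feature_share_among_convicted():
    """Fraction of convicted people who have the feature."""
    _, _, with_feature = expected_counts(True)
    _, _, without_feature = expected_counts(False)
    return with_feature / (with_feature + without_feature)


def innocent_share_among_flagged():
    """If everyone with the feature is flagged, the fraction who never offended."""
    people, offenders, _ = expected_counts(True)
    return (people - offenders) / people


if __name__ == "__main__":
    print(f"Convicts with the feature: {float(feature_share_among_convicted()):.0%}")
    print(f"Population with the feature: {float(SHARE_WITH_FEATURE):.0%}")
    print(f"Flagged people who never offended: {float(innocent_share_among_flagged()):.0%}")
```

Under these assumed numbers, 75% of convicts have the feature against 50% of the general population, so a classifier could easily learn to tell the two sets of photos apart. Yet the feature says nothing about who offends, and 95% of the people it would flag have never committed a crime. The separation comes entirely from the biased conviction rates that were put in by hand.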

Coverage of this paper has drawn parallels with the movie Minority Report, where so-called pre-cogs have prior knowledge of crimes that will be committed in the future. But this comparison misses a crucial point. In the movie, the prediction made by the pre-cogs is always in relation to a particular crime’s being committed by a particular individual at a specified time in the future. And, as the movie suggests, it’s ethically problematic to arrest someone before they’ve actually committed a crime. But in the Wu and Zhang paper it’s even worse because a prediction amounts to nothing more than a statement such as, “this person’s features bear some similarity to the features of a lot of people who have been processed by the criminal justice system.” It says nothing whatsoever about whether this particular person has ever committed a crime.

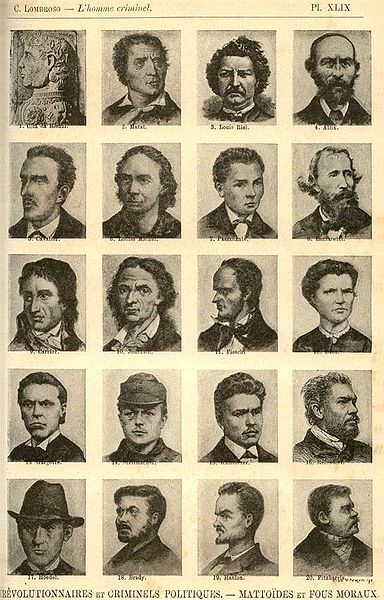

What is criminality? The state of having committed a crime? The tendency to commit crimes? Cesare Lombroso, the 19th-century Italian criminologist who put forward the theory that born criminals could be identified by congenital defects, coined the term “criminaloid” to describe a different type of criminal from a born criminal. A criminaloid was someone who just occasionally committed crimes, but did not have the physical features of a born criminal. Presumably this category was necessary in order to account for individuals who had been caught committing crimes but who did not have congenital defects. Even if you buy into such an idea of born criminals, surely there are also some people who have the facial features of criminals, yet who have never committed a crime. Should they be treated as though they have? Wu and Zhang don’t bother asking such questions, and their machine learning algorithms certainly won’t yield answers to them.

Being proficient in the use of machine learning algorithms such as neural networks, a skill that’s in such incredibly high demand these days, must feel to some people almost god-like — even Thor-like! Maybe every single categorization task will start to look like a nail for Thor’s hammer. Cat pictures and handwritten digits are fair game as “nails.” Criminality is not.