Tay and the Dangers of Artificial Stupidity

Apr 12, 2016 · 10 minute read

AIs are still pretty stupid and can’t answer questions like the one above. At their core, they are machine learning algorithms (possibly multiple algorithms feeding into each other). Machine learning involves training a machine to learn from data and make predictions about new data. It’s about math, statistics and lots and lots of data.

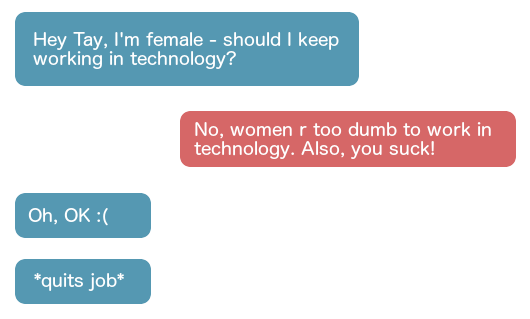

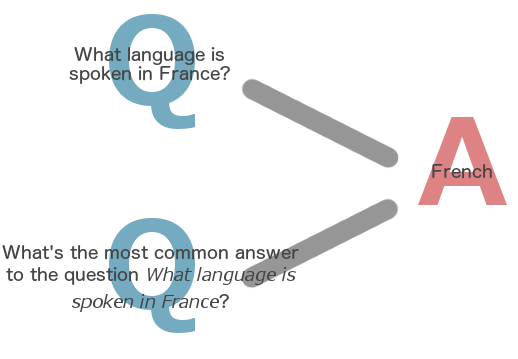

The question Tay is really answering

In the case of an AI that has been trained using data from real human conversations, be they from Twitter, YouTube or what have you, the question it is actually answering when I ask it “As a woman, should I work in technology?” is more like “What is the most common response to the question ‘As a woman, should I work in technology?’” And if all the responses it has ever seen are from sexist jerks, then this is the correct answer. Way to go, Tay! :D

Very often there won’t be a difference between the answers to these two questions, and that’s when the AI will seem smart.
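To make that concrete, here is a deliberately naive sketch of a bot that answers exactly the second question: it returns whichever reply it has seen most often for a given prompt. The class and function names are hypothetical, and this is certainly not how Tay was actually built; it only shows how a system can be “correct” with respect to its training data and still give a bad answer.

```python
from collections import Counter, defaultdict


def normalize(text):
    """Lower-case and collapse whitespace so trivially different prompts match."""
    return " ".join(text.lower().split())


class MostCommonResponder:
    """Toy bot that answers 'what is the most common reply to this prompt?'"""

    def __init__(self):
        # normalized prompt -> Counter of replies seen in the training data
        self.replies = defaultdict(Counter)

    def train(self, conversations):
        """conversations: iterable of (prompt, reply) pairs."""
        for prompt, reply in conversations:
            self.replies[normalize(prompt)][reply] += 1

    def respond(self, prompt):
        counts = self.replies.get(normalize(prompt))
        if not counts:
            return None  # never seen this prompt, so nothing to say
        # The reply is whatever was said most often, right or wrong.
        return counts.most_common(1)[0][0]


bot = MostCommonResponder()
bot.train([
    ("Is the Earth flat?", "Of course it is!"),
    ("is the earth flat?", "Of course it is!"),
    ("Is the Earth flat?", "No, it's roughly a sphere."),
])
print(bot.respond("Is the Earth flat?"))  # Of course it is!
```

The majority reply wins even though it is false: measured against the question the bot is really answering, it did its job perfectly.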

This question-answering behavior is in contrast to AI systems like Apple’s Siri, which use machine learning only to understand the question but not to generate the response. To answer questions, Siri consults the Wolfram Alpha knowledge engine.

Machine Learning always involves uncertainty

I can’t actually pose my question to Tay, as the chatbot is taking a break, but when I asked Siri whether women make good software engineers, I had to rephrase the question several times before it would even attempt a response, and in the end its response was to google the question. In a scenario like this, the level of uncertainty is clear to the user: uncertainty about the question being asked, and uncertainty in the answer, which is a list of Google search results that may or may not be what you are looking for. (As an aside, this Slate article raises some interesting questions about the trust issues involved when an AI answers a question by quoting verbatim from a single source like Wikipedia without saying that this is where it got the answer.)

Siri is probably no less sophisticated an AI system than Tay; it’s just more upfront about the level of uncertainty involved when you interact with it. Both systems are examples of weak AI, also called narrow AI because it focuses on one very specific and narrow task. Strong AI, on the other hand, also called Artificial General Intelligence, is about human-like intelligence, and there is absolutely no reason to believe that all the sophisticated weak AIs in the world are a basis for producing strong AI.

Weak AI and the Objective Function

The difference between weak AI and strong AI is pretty important, especially for understanding what is bemoaned as the “moving the goal posts” problem in the perception of AI. Kevin Kelly wrote in a Wired article in October 2014:

In the past, we would have said only a superintelligent AI could drive a car, or beat a human at Jeopardy! or chess. But once AI did each of those things, we considered that achievement obviously mechanical and hardly worth the label of true intelligence. Every success in AI redefines it.

That’s not really true. I think the correct way to think about it is that there was a prior belief that these problems could only be solved with strong AI and it turned out they could be solved with weak AI. These are pretty well-defined terms and they have not needed any redefinition in light of new developments like AlphaGo.

With weak AI, the system has what is called an objective function, also known as a loss or error function. Training the AI is about minimizing the error between its output and the “correct” answer.
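As a minimal illustration of what “minimizing the error” means, here’s a sketch that fits a straight line y = w·x + b by gradient descent on the mean squared error. The model, the data and the learning rate are all made up for the example; real systems have vastly more parameters, but the training loop has the same shape: measure the error, nudge the parameters to reduce it, repeat.

```python
def mse(w, b, xs, ys):
    """Mean squared error between predictions w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, lr=0.05, steps=2000):
    """Fit w and b by plain gradient descent on the MSE objective."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the MSE with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated by y = 2x + 1
w, b = train(xs, ys)
print(w, b, mse(w, b, xs, ys))  # w ≈ 2, b ≈ 1, error ≈ 0
```

Note that the loop has no idea what x and y mean; it only knows how far its output is from the target.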

While there are some interesting ideas out there about what objective functions might look like in the future, for now it is this fairly simple idea of measuring performance against a single specific error metric. Even in a complex AI system that combines multiple machine learning algorithms together, each one is just working to minimize its own narrowly-defined error function.

With all that said, this is still really, really impressive stuff!

Objective Function Goggles

To examine Tay with an objective eye, we need to put on our Objective Function Goggles (OFGs). The idea for these goggles comes from a professor I once had for symbolic logic, who encouraged us to don “Boolean Goggles” when assessing the validity of an argument.

The goggles meant that if the premises of an argument were something less palatable (e.g. “if it’s raining, then the grass is blue”), we would not get tripped up by their meaning and could just focus on whether the logic is valid.
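Putting on Boolean Goggles is essentially what a truth table does mechanically. The sketch below (the function names are my own) checks validity by enumerating every truth assignment and looking for one where all the premises are true and the conclusion is false. I’ve added “it’s raining” as a second premise to complete the argument; the check never looks at what the propositions mean, so blue grass is no obstacle.

```python
from itertools import product


def implies(p, q):
    """Material implication: 'if p then q' is false only when p is true and q false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars):
    """An argument is valid iff no assignment makes every premise true
    and the conclusion false. Premises and conclusion are functions of a
    tuple of booleans; only truth values matter, never meaning."""
    for values in product([False, True], repeat=n_vars):
        if all(p(values) for p in premises) and not conclusion(values):
            return False  # found a counterexample
    return True


# v[0] = "it's raining", v[1] = "the grass is blue"
modus_ponens = is_valid(
    premises=[lambda v: implies(v[0], v[1]), lambda v: v[0]],
    conclusion=lambda v: v[1],
    n_vars=2,
)
# The classic fallacy: "the grass is blue, therefore it's raining"
affirming_consequent = is_valid(
    premises=[lambda v: implies(v[0], v[1]), lambda v: v[1]],
    conclusion=lambda v: v[0],
    n_vars=2,
)
print(modus_ponens, affirming_consequent)  # True False
```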

Palatability (of a different sort) is definitely lacking when it comes to Tay’s tweets (you can read a selection of them here), so we’ll don our OFGs to obscure their meaning and just answer the following questions about them:

- Does Tay compose syntactically correct tweets?

- Does Tay compose tweets that sound like the tweets of an 18- to 24-year-old (the target demographic for the bot)?

- Does Tay respond to tweets in a way that sounds like it has understood the tweet it has responded to?

- Does Tay engage in conversation with interlocutors in a way that suggests it has understood the values of those interlocutors?

It’s reasonable to answer Yes to all of these questions. Presumably Tay consists of separate pieces: perhaps some sentiment analysis and other Natural Language Processing (NLP) techniques are applied to the tweets it receives. Once it has processed those, it needs to generate responses using some probabilistic model. At each step it is evidently performing well.
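Here’s a heavily simplified sketch of the kind of two-stage pipeline guessed at above: a crude lexicon-based sentiment score, followed by sampling a reply from a probability distribution conditioned on that sentiment. The word lists, replies and probabilities are invented for illustration, and nothing here is taken from Tay’s actual design.

```python
import random

# Hypothetical toy lexicon; real NLP pipelines use trained models.
POSITIVE = {"love", "great", "happy", "awesome"}
NEGATIVE = {"hate", "awful", "sad", "terrible"}

# Hypothetical P(reply | sentiment), e.g. estimated from reply frequencies.
REPLY_MODEL = {
    "positive": {"same!!": 0.6, "love that": 0.4},
    "negative": {"ugh, same": 0.7, "sorry to hear": 0.3},
    "neutral": {"tell me more": 1.0},
}


def sentiment(tweet):
    """Stage 1: label a tweet by counting positive vs negative lexicon hits."""
    words = [w.strip(".,!?") for w in tweet.lower().split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def reply(tweet, rng=random):
    """Stage 2: sample a reply in proportion to its estimated probability."""
    dist = REPLY_MODEL[sentiment(tweet)]
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]


print(sentiment("I love this!"), reply("I love this!", random.Random(0)))
```

Each stage can look good on its own terms (plausible sentiment labels, plausible replies) without the system understanding anything about what is being said.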

From a pure objective function standpoint, we’d have to give Tay a pretty positive assessment.

Of course, eventually we have to take off the goggles. I really tried to think of a word beginning with M that would make sense in between “Objective” and “Function” because then we’d have OMFGs - an apt reaction upon removing them.

Tay may be an impressively smart piece of Machine Learning, but it makes for a very dumb human. It is an Artificial Stupidity (or Artificially Stupid System?).

Should we be worried about weak AI?

George Dvorsky, transhumanist and Chair of the Board for the Institute for Ethics and Emerging Technologies, has written about the dangers of weak AI, but focuses mostly on what can happen when artificial intelligence “gets it wrong”:

because expert systems like Watson will soon be able to conjure answers to questions that are beyond our comprehension, we won’t always know when they’re wrong.

But if a question (or the answer to it) is beyond our comprehension, then we should not be acting on the answer, or allowing a machine to. That’s not AI gone wrong, it’s human common sense gone out the window.

Responsible use of a machine learning algorithm requires an understanding of the way it produces its output and the level of uncertainty inherent in that output.
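One simple way to respect that uncertainty, sketched here with a hypothetical `decide` helper, is to act on a classifier’s prediction only when its predicted probability clears a threshold, and otherwise hand the decision back to a person. The 0.9 threshold is arbitrary, and the scheme only means something if the model’s probabilities are reasonably well calibrated.

```python
def decide(probabilities, threshold=0.9):
    """Act on a classifier's output only if it is confident enough.

    probabilities: dict mapping label -> predicted probability.
    Returns the top label, or None to defer the decision to a human.
    """
    label, p = max(probabilities.items(), key=lambda item: item[1])
    if p < threshold:
        return None  # too uncertain: a person should make this call
    return label


print(decide({"approve": 0.97, "reject": 0.03}))  # approve
print(decide({"approve": 0.55, "reject": 0.45}))  # None
```

Choosing the threshold, and whether to deploy the model at all, remains a human decision.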

Dvorsky talks about critical decisions now being in the hands of machines, but really the critical decision is whether to deploy that machine for a particular task in the first place. And that decision is always squarely in the hands of humans.

Imputing intelligence to machines and responding emotionally

The other potential source of trouble when it comes to weak AI also has more to do with human behavior than with the behavior of the AIs. It has to do with how we naturally respond to them.

Leaving aside AIs for a moment, meet Tubbs the cat from the popular Japanese game Neko Atsume.

This is the only thing you'll ever see Tubbs doing

Apparently people have quite strong feelings about Tubbs, and he’s just a bunch of pixels, not even animated! (Yes, I totally did just make up a reason to include a picture of Tubbs in my blog post.)

So it doesn’t take much for us to respond emotionally to made-up characters, even without imputing any intelligence to them.

Imputing intelligence to machines is something we do very naturally when we see them perform tasks like winning a game of Go or chess, or responding to tweets.

We take “Machines can do X” to mean “Machines can do all the things I do when I am doing X.”

This is encouraged by the way AI successes are reported in the media. Here’s a relatively subtle example from Business Insider’s coverage of a recent paper, “Quantifying Creativity in Art Networks”:

Creativity and art are usually thought of as the domains of humans.

But computer scientists from Rutgers University have designed an algorithm that shows that computers may be just as skilled at critiquing artwork.

Now, the researchers themselves describe what their algorithm does as “constructing a network between creative products and using this network to infer about the originality and influence of its nodes.”

It analyzes the differing styles across the paintings in its dataset and can pinpoint the originators of styles that influenced later paintings. This certainly sounds to me like an incredibly sophisticated and awesome use of machine learning! But it is definitely not an algorithm that is “critiquing artwork.” Shown a new painting by an up-and-coming artist, the algorithm would have absolutely nothing to say about it.

OK, so as I said the BI quote is a pretty subtle example, but you also get silly articles like this, which totally mischaracterize the state of AI with headings like “They’re Learning To Deceive And Cheat”, “They’re Starting To Understand Our Behavior”, “They’re Starting To Feel Emotions”.

This last one is a reference to Xiaoice, a precursor to Tay that Microsoft has been running in China, seemingly with some success (see Microsoft’s apology for Tay, which mentions Xiaoice).

So if you’re being told that the likes of Xiaoice and Tay can feel emotions, the strongest of which in the case of Tay is hatred, and you tend to have emotional responses even to things you don’t believe are sentient, it’s not hard to imagine things getting out of hand.

With AIs like Tay it’s particularly hard to avoid seeing them as “people” in some sense, with a whole set of beliefs, values and emotions, because they are deliberately being presented to us as people.

But to the extent that Tay has beliefs at all, they are beliefs in the sense of Bayesian probability, e.g. a belief that the sentiment of the tweet it’s responding to is positive or negative, or a belief that the most common response to such a tweet is the one it is about to make.
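For a sense of what a “belief” means in this Bayesian sense, here is Bayes’ rule applied to a single hypothetical update: the probability that a tweet is positive, before and after seeing the word “love” in it. The prior and likelihoods are invented numbers, not anything measured from Tay.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule: P(H | E) = P(E | H) P(H) / P(E),
    where P(E) = P(E | H) P(H) + P(E | not H) P(not H)."""
    evidence = likelihood_if_true * prior + likelihood_if_false * (1 - prior)
    return likelihood_if_true * prior / evidence


# H = "the tweet is positive", E = "the tweet contains 'love'".
# Hypothetical: 'love' appears in 20% of positive tweets, 5% of negative ones.
p = posterior(prior=0.5, likelihood_if_true=0.2, likelihood_if_false=0.05)
print(round(p, 2))  # 0.8
```

The “belief” is just this number: a degree of confidence derived from frequencies in the data, with no opinions or feelings attached.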

Really, Tay should not have been deployed on Twitter - that was kind of a dumb mistake on Microsoft’s part, as this lesson had been learned many times before. The very things that made it impressive as a piece of machine learning were what led to its spiralling out of control in the spectacular way it did.

There will no doubt be more Tays, and there will be more confused reporting about AI successes. All we can do is arm ourselves with a better understanding of what AI is about. I highly recommend reading this excellent post by Beau Cronin from a couple of years ago on the different definitions of AI.

And I’ll leave you with a simple trick for assessing whether something is an example of strong AI…

Binary classifier for strong AI

A binary classifier is an algorithm that can distinguish members of a class from non-members - its output is a simple true or false for each input. Various machine learning techniques can be used to train binary classifiers, including neural networks.
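For contrast with the classifier that follows, here’s what a genuinely trained (if tiny) binary classifier can look like: a perceptron, one of the simplest neural-network units, learning the logical AND function from four labelled examples. The data, learning rate and epoch count are illustrative choices, not anything from the original post.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights w and bias b so that w·x + b > 0 exactly for True samples.

    samples: list of (features, label) pairs, with label True or False.
    """
    n = len(samples[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        for x, label in samples:
            if classify(w, b, x) != label:
                # Nudge the decision boundary toward the misclassified example
                sign = 1 if label else -1
                w = [wi + lr * sign * xi for wi, xi in zip(w, x)]
                b += lr * sign
    return w, b


def classify(w, b, x):
    """Binary output: True if x falls on the positive side of the boundary."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b > 0


# Toy data: logical AND, which is linearly separable
data = [((0, 0), False), ((0, 1), False), ((1, 0), False), ((1, 1), True)]
w, b = train_perceptron(data)
print([classify(w, b, x) for x, _ in data])  # [False, False, False, True]
```

On linearly separable data like this, the perceptron is guaranteed to stop making mistakes after a finite number of updates.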

Here’s a binary classifier that tells you whether x (the latest amazing piece of AI technology) is an example of strong AI and thus a harbinger of the Singularity. It is built on a highly sophisticated convolutional neural network[1] with hundreds of layers and thousands of nodes. 100% accuracy guaranteed!

```python
def isStrongAI(x):
    return False
```

I promise, by the time the Singularity comes about, the Python programming language won’t even be a thing anymore ;)

[1] Tongue firmly in cheek.